Are we going to see lidar technology everywhere? It’s a future to look forward to!

When it launched the iPhone 12, Apple enthusiastically showed off the capabilities of its lidar sensor. The company claims that lidar will improve the iPhone’s camera with faster focus, especially in low light. Lidar may even pave the way for a series of more sophisticated augmented reality apps on the iPhone 12 and, more likely, on the next generation of Apple devices.

The launch event did not explain how lidar works, but this is not the first time Apple has put lidar in its devices; the technology arrived with the iPad Pro released in March. Although few people have had the chance to tear down an iPhone 12 yet, we are already quite familiar with Apple’s lidar through the iPad.

Apple, the industry’s “locomotive”, has now called out lidar by name. Which smartphone maker will be next to respond?

Lidar works by firing a laser into the environment and measuring the time it takes for the light to bounce back to a sensor, from which it calculates the exact distance. Because the speed of light is constant, converting that time into a distance is straightforward. By continuously firing a series of these lasers into the surrounding area so that they form a 2D grid over the objects in view, the system builds a 3D map showing where each object sits in the measured space.
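The time-of-flight arithmetic described above can be sketched in a few lines of Python. This is only an illustration of the principle, not Apple’s implementation; the function names and the sample timings are made up for the example.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the distance to a target in metres.

    The pulse travels to the target and back, so the one-way
    distance is half of (speed of light x round-trip time).
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


def depth_grid(round_trip_grid):
    """Convert a 2D grid of round-trip times into a 2D grid of distances."""
    return [[distance_from_round_trip(t) for t in row] for row in round_trip_grid]


# Hypothetical 2x2 grid of round-trip times, in seconds.
sample_times = [[10e-9, 12e-9], [20e-9, 33e-9]]
for row in depth_grid(sample_times):
    print([round(d, 2) for d in row])
```

A round trip of 10 nanoseconds corresponds to a target about 1.5 metres away, which shows how fine the timing has to be at smartphone ranges.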

A report released by System Plus Consulting in June indicated that the iPad’s lidar projects light into the environment through an array of vertical-cavity surface-emitting lasers (VCSELs) made by Lumentum. The system then detects the returning light with an array of sensors called single-photon avalanche diodes (SPADs), made by Sony.

The combination of VCSELs and SPADs is notable because it could also enable revolutionary lidars for the automotive industry. One strength of both components is that they are simple to manufacture, using ordinary semiconductor fabrication techniques. As a result, both benefit greatly from the scale of today’s semiconductor industry. As VCSEL-based sensors become more widely used, they should get cheaper and better.

VCSEL will fire lasers like this.

Ouster and Ibeo, two makers of high-end lidar that use VCSELs, are the two brightest names among the many companies pursuing lidar technology. Apple’s use of lidar in its new products will create two waves:

Other smartphone companies will follow in Apple’s footsteps, and lidar technology will become more popular than ever.

It will also be a tailwind for Apple’s own development, in terms of both technology and profit.

VCSELs Help Apple Create Lidars Leaner Than Before

More than ten years ago, Velodyne announced the world’s first three-dimensional lidar sensor. This rotating device cost as much as $75,000 and was far from compact. Obviously, to integrate lidar into a smartphone, Apple needed something much smaller and cheaper, and it was the VCSEL that made this possible.

Velodyne’s old lidar design.

What is a VCSEL? If you build a laser using conventional semiconductor fabrication techniques, you have two options. One is to emit light from the edge of the wafer (known as an edge-emitting laser); the other is to emit light from the top surface of the device (known as a vertical-cavity surface-emitting laser, or VCSEL).

Historically, edge-emitting lasers have been more powerful. Although VCSELs have long been used in electronic devices such as optical mice and fiber-optic networking equipment, they had a reputation for being unsuitable for applications that require a lot of light. However, VCSELs have quietly grown more powerful over time.

To build an edge-emitting laser, the manufacturer has to cut the wafer to expose the emitter. This makes the manufacturing process more complicated and expensive, and it also limits the number of laser emitters per wafer. In contrast, a VCSEL emits light perpendicular to the wafer, so there is no need to cut the wafer apart.

In an edge-emitting laser, light is reflected back and forth between the walls of the material before being emitted.

This means that a single chip can accommodate tens, hundreds, or even thousands of VCSELs. In principle, when mass produced, a chip containing thousands of VCSELs costs only a few dollars to make.

The story is similar for single-photon avalanche diodes (SPADs). As the name suggests, a SPAD is so sensitive that it can detect a single photon. That high sensitivity also means it picks up a lot of noise, so efficient data processing is needed to use SPADs in a lidar system. Just like the VCSEL, the strength of the SPAD lies in how simple it is to produce; a small chip can contain thousands of SPADs.
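One common way to pull a signal out of noisy single-photon detections is to collect arrival times over many laser pulses, histogram them, and take the fullest bin as the true return. The sketch below assumes that approach; it is not a description of how Apple or Sony process SPAD data, and the function name, bin width, and sample data are all hypothetical.

```python
from collections import Counter

# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def estimate_distance(arrival_times_ns, bin_width_ns=1.0):
    """Estimate target distance in metres from noisy photon arrival times.

    arrival_times_ns: round-trip times (nanoseconds) of every photon the
    detector registered over many pulses, including stray ambient photons.
    Real returns cluster in one time bin; noise is spread across all bins.
    """
    if not arrival_times_ns:
        raise ValueError("no photon detections to process")

    # Count how many photons landed in each time bin.
    histogram = Counter(int(t // bin_width_ns) for t in arrival_times_ns)

    # The fullest bin is taken as the laser return.
    peak_bin, _count = max(histogram.items(), key=lambda item: item[1])

    # Use the centre of the bin as the round-trip time estimate.
    round_trip_s = (peak_bin + 0.5) * bin_width_ns * 1e-9
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


# Hypothetical data: four real returns near 10 ns plus three stray photons.
detections = [10.2, 3.3, 10.4, 17.7, 10.6, 25.1, 10.5]
print(f"{estimate_distance(detections):.2f} m")
```

Wider bins tolerate more timing jitter but give a coarser distance estimate, so the bin width is a trade-off between noise rejection and resolution.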

Combining VCSELs and SPADs makes lidar designs possible that are more advanced than ever before. The old Velodyne lidar carried 64 lasers stacked in a column on a rotating gimbal. Each laser was paired with its own detector. That complicated design, along with the need to align each laser precisely with its corresponding sensor, made Velodyne’s lidar extremely expensive.

In recent years, some companies have experimented with using tiny mirrors to steer the laser beam. Although this design requires only a single laser, the lidar still contains moving parts, so it is not yet an ideal solution.

In contrast, Apple, Ouster, and Ibeo are all building lidar sensors with no moving parts. With hundreds or thousands of lasers on a single chip, a VCSEL-based lidar can dedicate each laser to a particular point in its field of view. And since all the lasers fit on a single chip, assembly is far less cumbersome than with Velodyne’s rotating design.
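To illustrate how a fixed grid of lasers can yield 3D positions, the sketch below maps each emitter’s row and column to a fixed direction and combines it with the measured distance. The evenly spaced angles, the 60° by 45° field of view, and the function name are assumptions chosen for the example, not specifications of any real sensor.

```python
import math


def beam_to_point(row, col, distance_m, rows, cols,
                  h_fov_deg=60.0, v_fov_deg=45.0):
    """Convert one laser's grid position and measured distance to (x, y, z).

    Assumes (hypothetically) that beams are spread evenly across the field
    of view. x points right, y points up, z points away from the sensor.
    """
    # Angle of this beam relative to the sensor's centre line.
    azimuth = math.radians((col + 0.5) / cols * h_fov_deg - h_fov_deg / 2)
    elevation = math.radians(v_fov_deg / 2 - (row + 0.5) / rows * v_fov_deg)

    # Standard spherical-to-Cartesian conversion.
    x = distance_m * math.cos(elevation) * math.sin(azimuth)
    y = distance_m * math.sin(elevation)
    z = distance_m * math.cos(elevation) * math.cos(azimuth)
    return (x, y, z)


# The centre beam of a 3x3 grid points straight ahead.
print(beam_to_point(row=1, col=1, distance_m=2.0, rows=3, cols=3))
```

Because every beam’s direction is fixed when the chip is made, nothing has to move: the only thing measured at run time is the distance along each beam.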

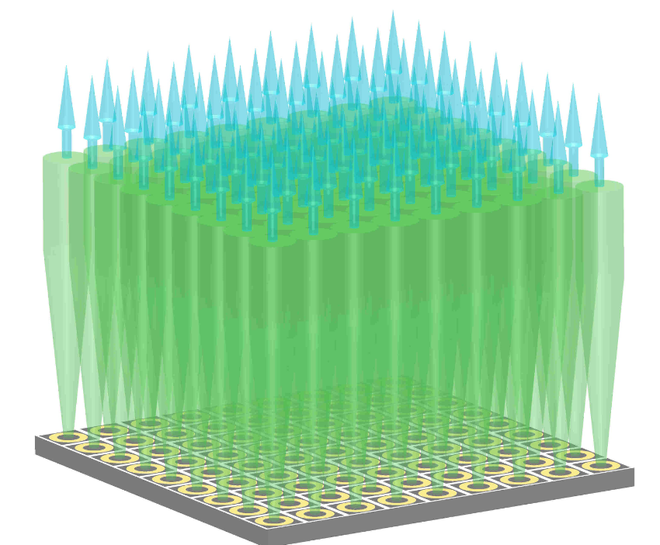

The drawing represents what the lidar system will “see”.

New iPhones also use a 3D sensor called the TrueDepth camera, which powers Apple’s Face ID feature. It too uses an array of VCSELs produced by Lumentum. TrueDepth works by projecting a grid of 30,000 points onto the surface of an object in order to identify its three-dimensional shape. Thanks to this ability, it can clearly “see” where your face protrudes (nose, lips, eyes, …) and where it recedes (dimples, nostrils, …).