This brief history of computing traces how computers evolved from their humble beginnings into the machines we use today to surf the Internet, play games, and stream multimedia, in addition to crunching numbers at high speed.

Computers were not invented for entertainment or email, but to solve a serious number-crunching crisis. By 1880, the U.S. population had grown so large that it took more than seven years to tabulate the census results. The government sought a faster way to get the job done, which gave rise to punch-card computers that filled entire rooms.

1801: In France, Joseph Marie Jacquard invents a loom that uses punched wooden cards to weave fabric patterns automatically. Early computers would later use punch cards in a similar way.

1822: English mathematician Charles Babbage conceives of a steam-driven calculating machine that could compute tables of numbers. The project, funded by the British government, ultimately fails. More than a century later, however, the world’s first computer is actually built. According to Wikipedia, Charles Babbage is regarded as the “father of the computer,” and his concept is the closest to what we see today.

1890: Herman Hollerith designs a punch card system to tabulate the 1890 census, accomplishing the task in just three years and saving the government $5 million. He goes on to found a company that would ultimately become IBM.

1936: Alan Turing introduces the concept of a universal machine, later known as the Turing machine, capable of computing anything that can be calculated. The basic concept of the modern computer is based on this idea.

1937: J.V. Atanasoff, professor of physics and mathematics at Iowa State University, attempts to build the first computer without gears, belts, or shafts.

1939: The Hewlett-Packard Company is founded by David Packard and Bill Hewlett in a garage in Palo Alto, California (according to the Computer History Museum).

1941: Atanasoff and his graduate student, Clifford Berry, design a computer capable of solving 29 equations simultaneously. This marks the first time a computer is able to store information in its main memory.

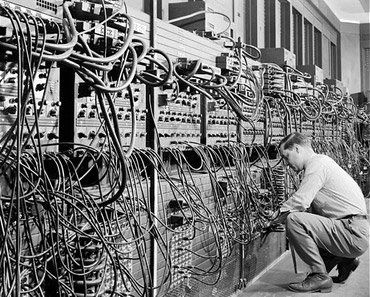

1943 – 1944: Two professors from the University of Pennsylvania, John Mauchly and J. Presper Eckert, build the Electronic Numerical Integrator and Computer (ENIAC). Considered the “grandfather” of modern digital computers, it fills a room measuring 6 x 12 m and comprises 40 panels, each 2.4 meters high, and 18,000 vacuum tubes. It can process 5,000 operations per second, faster than any previous device.

1946: Mauchly and Eckert leave the University of Pennsylvania and receive funding from the Census Bureau to build the UNIVAC, the first commercial computer for business and government applications.

1947: William Shockley, John Bardeen, and Walter Brattain of Bell Laboratories invent the transistor. They discover how to make an electric switch out of solid materials, with no need for a vacuum.

1953: Grace Hopper develops the first computer language, which eventually becomes known as COBOL. Thomas Johnson Watson Jr., son of IBM CEO Thomas Johnson Watson Sr., conceives the IBM 701 EDPM to help the United Nations keep tabs on North Korea during the war.

1954: The FORTRAN programming language, short for FORmula TRANslation, is developed by a team of IBM programmers led by John Backus (according to the University of Michigan). Also this year, the massive SAGE computer defense system is designed to help the Air Force track radar data in real time. The system incorporates technical advances such as a modem and a graphical display. It weighs up to 300 tonnes and occupies an entire room.

1958: Jack Kilby and Robert Noyce unveil the integrated circuit, known as the computer chip. Kilby is awarded the Nobel Prize in Physics in 2000 for his work.

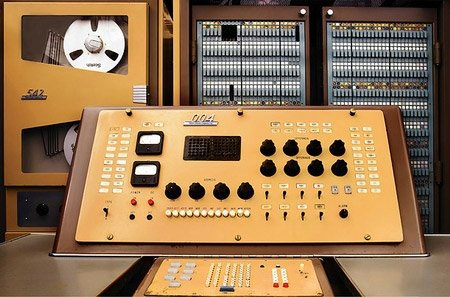

1960: The NEAC 2203 is manufactured by Nippon Electric Company (NEC) and is one of the first solid-state computers in Japan. It is used in business, science, and engineering.

NEAC 2203

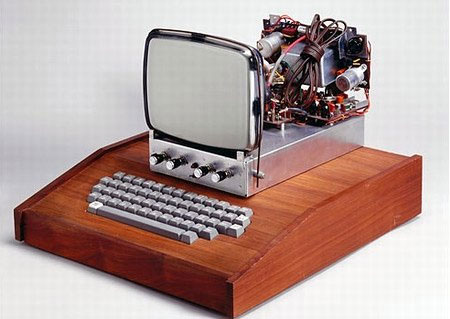

1964: Douglas Engelbart presents the prototype of a modern computer, with a mouse and a graphical user interface (GUI). This marked the evolution of the computer from a machine dedicated to scientists and mathematicians to a technology more accessible to the general public.

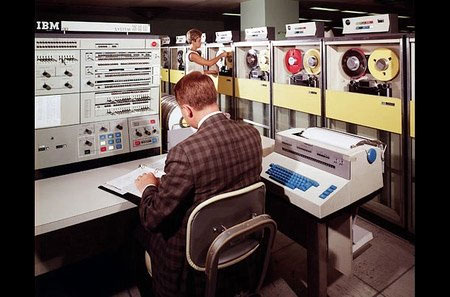

1964: In the same year, IBM introduces the System/360, the first computer family to cover the full range of applications, from small to large and from commercial to scientific. Users can scale their installations up or down without having to worry about rewriting their software. High-end System/360 models play an important role in NASA’s Apollo missions as well as in air traffic control systems.

1964: The CDC 6600, designed by computer architect Seymour Cray, becomes the fastest machine in the world. It remains the “speed champion” until 1969, when Cray designs his next supercomputer.

The CDC 6600 computer

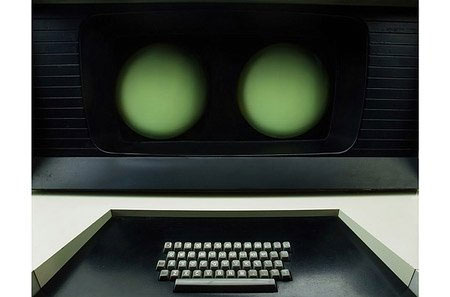

1965: The PDP-8 is manufactured by Digital Equipment Corporation (DEC) and is the first commercially successful minicomputer. After reaching the market, the PDP-8 sells more than 50,000 units. It can do the work of a mainframe but costs only around $16,000, while IBM’s System/360 runs to hundreds of thousands of dollars.

The DEC PDP-8 computer

1969: A team of developers at Bell Labs builds UNIX, an operating system that addresses compatibility issues. Later rewritten in the C programming language, UNIX is portable across multiple platforms and becomes the operating system of choice at many large businesses and government organizations. Because the system is slow, it never quite gains traction among home PC users.

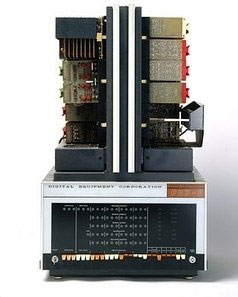

1969 also marks the introduction of the Interface Message Processor (IMP), the first generation of gateways, known today as routers. The IMP plays an important role in building the world’s first packet-switched network, ARPANET, the predecessor of today’s global Internet.

1970: The newly formed Intel Corporation announces the Intel 1103, the world’s first dynamic random-access memory (DRAM) chip.

1971: Alan Shugart leads a team of IBM engineers who invent the “floppy disk,” which allows data to be shared between computers. The Kenbak-1 also appears this year. It is considered the world’s first personal computer and is touted as an easy-to-use educational tool, but only a few dozen units are sold. Built without a microprocessor, it has only 256 bytes of memory, and its output is just a series of flashing lights.

1973: Robert Metcalfe, a member of the Xerox research staff, develops Ethernet for connecting multiple computers and other hardware.

1974 – 1977: Several personal computers hit the market, including the Scelbi, the Mark-8, the Altair, the IBM 5100, Radio Shack’s TRS-80 (informally known as the “Trash 80”), and the Commodore PET.

1975: The January issue of Popular Electronics magazine describes the Altair 8800 as “the world’s first mini-computer”. Two “computer geeks,” Paul Allen and Bill Gates, offer to write software for the Altair using the new BASIC language. On April 4, after the success of this first effort, the two childhood friends form their own software company, Microsoft.

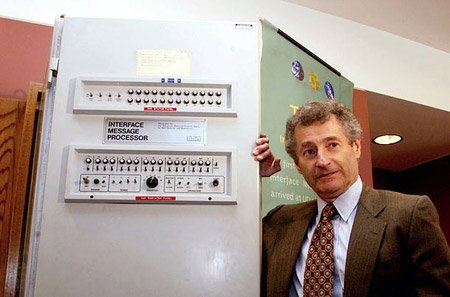

1976: The Apple I is designed by Steve Wozniak but rejected by his boss at HP. Undeterred, he shows it to the Homebrew Computer Club in Silicon Valley and, along with his friend Steve Jobs, manages to sell 50 pre-built units to the Byte Shop in Mountain View, California, for around $666 each. Jobs and Wozniak found Apple Computer on April Fools’ Day. The Apple I is the first computer with a single circuit board (according to Stanford University).

Apple Computer I

1976: The Cray-1 is released and is the fastest computer in the world at the time. Although it costs around $5 million to $10 million, it still sells well. It is one of the machines designed by computer architect Seymour Cray, who dedicates his life to building what become known as supercomputers.

The Cray-1 computer.

1977: Radio Shack’s initial production run of the TRS-80 is just 3,000 units, and it sells very well. For the first time, non-geeks can write programs and make a computer do what they want.

The TRS-80 computer

1977: Jobs and Wozniak incorporate Apple and launch the Apple II at the first West Coast Computer Faire. The Apple II offers color graphics and includes an audio cassette drive for storage.

1978: Accountants applaud the introduction of VisiCalc, the first computerized spreadsheet.

1979: Word processing becomes a reality when MicroPro International releases WordStar. “The most significant change is the addition of margins and word wrap (moving text to the next line automatically without breaking the layout),” says Rob Barnaby, creator of WordStar. Other changes include getting rid of command mode and adding a print function.

1981: IBM’s first personal computer, code-named “Acorn”, is introduced. It uses Microsoft’s MS-DOS operating system and has an Intel chip, two floppy drives, and an optional color display. Sears & Roebuck and Computerland sell the machines, marking the first time a computer is available through outside distributors. It also popularizes the term PC.

1981: The Osborne 1 is released as the first portable computer, weighing 10.8 kg and costing less than $2,000. It becomes popular thanks to its low price and the large library of software bundled with it.

The Osborne 1 portable computer.

1983: Apple’s Lisa is the first personal computer with a graphical user interface. It also features drop-down menus and icons. The Lisa flops but eventually evolves into the Macintosh. The Gavilan SC is the first portable computer with the familiar flip form factor and the first to be marketed as a “laptop”.

1983: The Hewlett-Packard 150 arrives, a first step toward a technology that is widespread today. The HP 150 is the first computer on the market with touchscreen technology. Its 9-inch screen is surrounded by infrared transmitters and receivers that detect the position of the user’s finger.

The Hewlett-Packard 150 computer.

1985: Microsoft announces Windows, according to Encyclopedia Britannica. It is the company’s response to Apple’s GUI. Commodore unveils the Amiga 1000, which offers advanced audio and video capabilities.

1985: The first .com domain is registered on March 15, years before the World Wide Web would mark the formal beginning of Internet history. Symbolics Computer Company, a small Massachusetts computer manufacturer, registers Symbolics.com. More than two years later, only 100 .com domains had been registered.

1986: Compaq releases the Deskpro 386. Its 32-bit architecture offers speeds comparable to those of a mainframe.

1990: Tim Berners-Lee, a researcher at CERN, the high-energy physics laboratory in Geneva, develops HyperText Markup Language (HTML), giving rise to the World Wide Web.

1993: The Pentium microprocessor promotes the use of graphics and music on PCs.

1994: PCs become gaming machines as games such as “Command & Conquer”, “Alone in the Dark 2”, “Theme Park”, “Magic Carpet”, “Descent”, and “Little Big Adventure” hit the market.

1996: Sergey Brin and Larry Page develop the Google search engine at Stanford University.

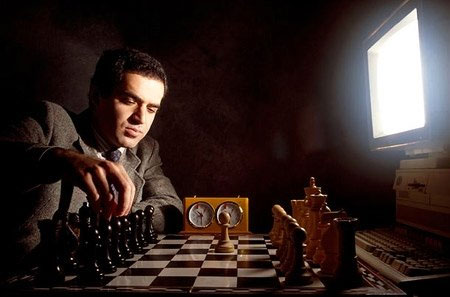

1997: Microsoft invests $150 million in Apple, which is struggling at the time, ending Apple’s lawsuit against Microsoft, in which Apple alleged that Microsoft had copied the “look and feel” of its operating system. Around the same time, Deep Blue (a project that started at IBM in the late 1980s to solve difficult problems using parallel processing) defeats the world chess champion, Garry Kasparov.

World chess champion 1997, Garry Kasparov.

1999: The term Wi-Fi becomes part of computing parlance, and users begin connecting to the Internet wirelessly.

2001: Apple announces the Mac OS X operating system, which offers a protected memory architecture and pre-emptive multitasking, among other benefits. Not to be outdone, Microsoft launches Windows XP, which features a significantly redesigned graphical interface.

2003: The first 64-bit processor, the AMD Athlon 64, is released to the consumer market.

2004: Mozilla’s Firefox 1.0 challenges Microsoft’s Internet Explorer, the dominant web browser at the time. This is also the year that Facebook, a social networking site, was officially launched.

2005: YouTube, a video sharing service, is founded. Google acquires Android, a Linux-based mobile operating system.

2006: Apple introduces the MacBook Pro, its first Intel-based, dual-core mobile computer, as well as an Intel-based iMac. The same year, Nintendo’s Wii game console hits the market.

2007: The birth of the iPhone brings more computing functions to smartphones.

2009: Microsoft launches Windows 7, which offers the ability to pin applications to the taskbar, as well as advances in touch and handwriting recognition, among many other features.

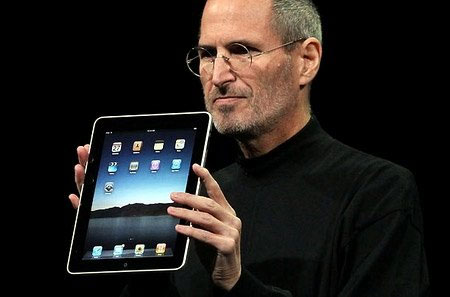

2010: Apple launches the iPad, changing the way consumers view media and jump-starting the then-dormant tablet computer segment.

2011: Google launches the Chromebook, a laptop that runs Google Chrome OS.

2012: Facebook reached 1 billion users on October 4.

2015: Apple launches the Apple Watch. Microsoft released Windows 10.

2016: The first reprogrammable quantum computer is created. “Until now, there has been no quantum computing platform capable of programming new algorithms into the system. They are usually each tailored to attack a particular algorithm,” said study lead author Shantanu Debnath, a quantum physicist and optical engineer at the University of Maryland, College Park.

2017: DARPA (the Defense Advanced Research Projects Agency) develops a new “Molecular Informatics” program that uses molecules as computers. “Chemistry offers a rich set of properties that we may be able to harness for rapid, scalable information storage and processing,” Anne Fischer, program manager in DARPA’s Defense Sciences Office, said in a statement.

“Millions of molecules exist, and each has a unique three-dimensional atomic structure along with variables such as shape, size, or even color. This richness provides a vast design space for exploring novel, multi-value ways of encoding and processing data beyond the 0s and 1s of current logic-based digital architectures.”